nVidia is a graphics card manufacturer for the IBM PC and its compatibles. Joining the market late, they went head to head with ATi Technologies in the gaming video card market, and still do to this day (with ATi now a part of AMD).

After a dismal start with their first graphics chip, the NV1, the company focussed solely on 3D gaming. The follow-up graphics processor, called NV3, gave their card, the RIVA 128, a competitive edge over the 3D performance benchmark of the time, the 3Dfx Voodoo.

nVidia were the first to bring DDR (double data rate) SDRAM to the graphics card market in the form of the GeForce 256, effectively doubling the memory bandwidth their cards could support.

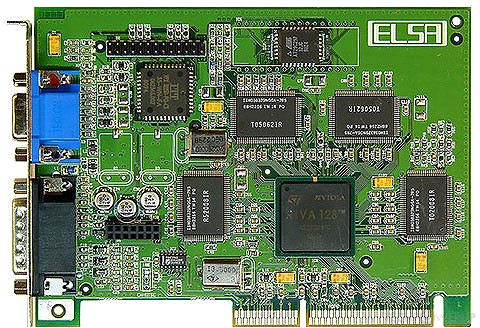

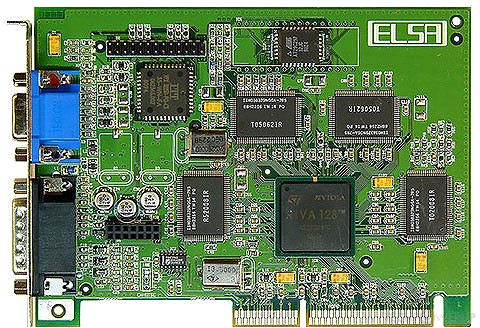

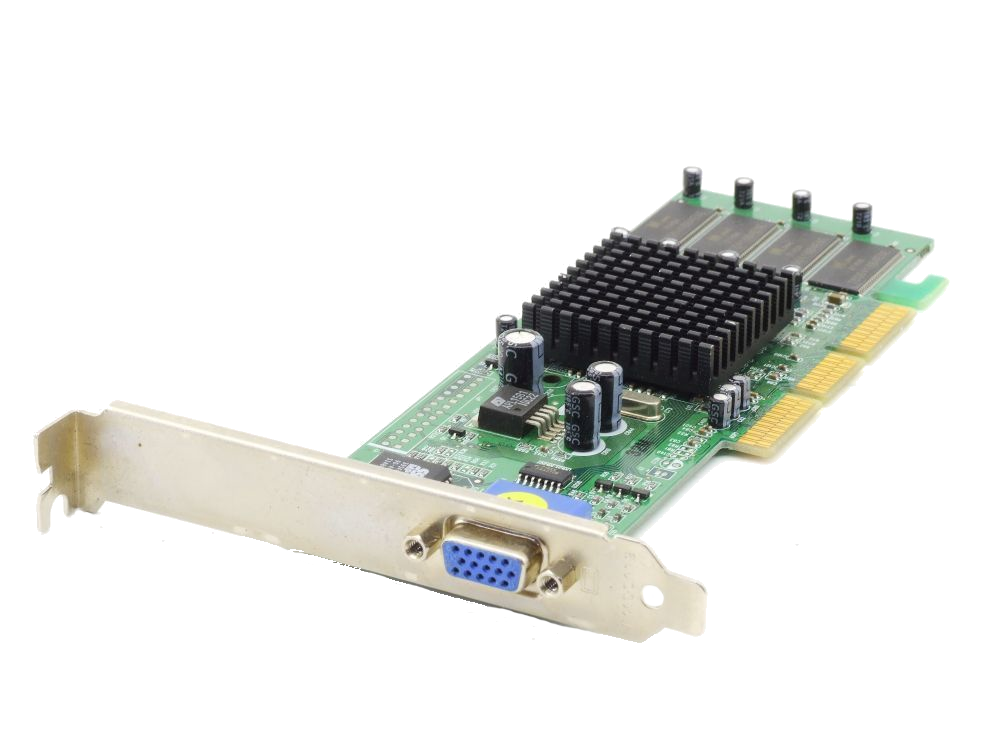

RIVA 128

Launched: April 1997

Chipset: NV3

Bus: PCI or AGP 2x

Memory: 4 MB (128-bit)

Ports: 15-pin DSUB

DirectX: 5.0

OpenGL: 1.0

Price: ?

The NV3 graphics processor arrived in the form of the RIVA 128 in 1997. Just like the ATi 3D Rage II, which had arrived the year before, the RIVA 128 had 1 pixel shader, 0 vertex shaders, 1 texture mapping unit and 1 ROP (Raster Operation Pipeline).

nVidia's chip allowed for up to 4 MB of SDRAM on a card, accessed through a 128-bit memory interface. Both the GPU and the memory ran at 100 MHz. RIVA 128 cards were made for both PCI and AGP.

Rough theoretical performance of the RiVA 128 chip is 100 MPixel/s pixel rate, and 100 MTexel/s texture rate.

Memory bandwidth is approximately 1.6 GB/s.
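As a rough illustration of where these theoretical figures come from, here is a minimal sketch. The function names are my own, and it assumes the usual simplification of one pixel per pipeline per clock and decimal units (1 GB = 1000 MB).

```python
def fill_rate_mpixels(core_mhz: float, pipelines: int) -> float:
    """Peak pixel rate in MPixel/s, assuming one pixel per pipeline per clock."""
    return core_mhz * pipelines


def memory_bandwidth_gb(bus_bits: int, memory_mhz: float,
                        transfers_per_clock: int = 1) -> float:
    """Peak memory bandwidth in GB/s (decimal units)."""
    bytes_per_transfer = bus_bits / 8  # a 128-bit bus moves 16 bytes at a time
    return bytes_per_transfer * memory_mhz * transfers_per_clock / 1000


# RIVA 128 figures quoted above: 100 MHz core, 1 pipeline,
# 128-bit memory bus, 100 MHz memory.
riva128_pixels = fill_rate_mpixels(core_mhz=100, pipelines=1)
riva128_bandwidth = memory_bandwidth_gb(bus_bits=128, memory_mhz=100)

print(f"RIVA 128: {riva128_pixels:.0f} MPixel/s, {riva128_bandwidth:.1f} GB/s")
```

Passing `transfers_per_clock=2` models DDR memory, which is why DDR doubles the bandwidth figure at the same clock speed and bus width.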

The RIVA 128 was nVidia's first Direct3D-compatible GPU, supporting DirectX 5.0. RIVA stands for Real-time Interactive Video and Animation accelerator, and the "128" in the name refers to the chip's 128-bit memory bus width. It has a 206 MHz RAMDAC and supports the VESA DDC2 and VBE3 standards. It has all the hardware features for DirectX 5 and also has good OpenGL compatibility. It renders only at 16-bit colour depth. 3D performance is on par with the original 3Dfx Voodoo 1.

This card was OEM'd by Dell, who used the STB Systems rebranded card. It was also sold as:

The RIVA 128 went head-to-head against the Voodoo 1.

Beware of text fuzziness in DOS on all early nVidia cards up to and including the RIVA 128; this was due to poor-quality RAMDACs. Despite this, it's highly recommended for DOS (no scrolling bugs, Mode X works fine and the BIOS is VBE 3.0), and in Win98SE it can run at 1280x1024. I'm not sure about Windows 3.1 drivers.

For more details, go to my dedicated page for the RIVA 128 series.

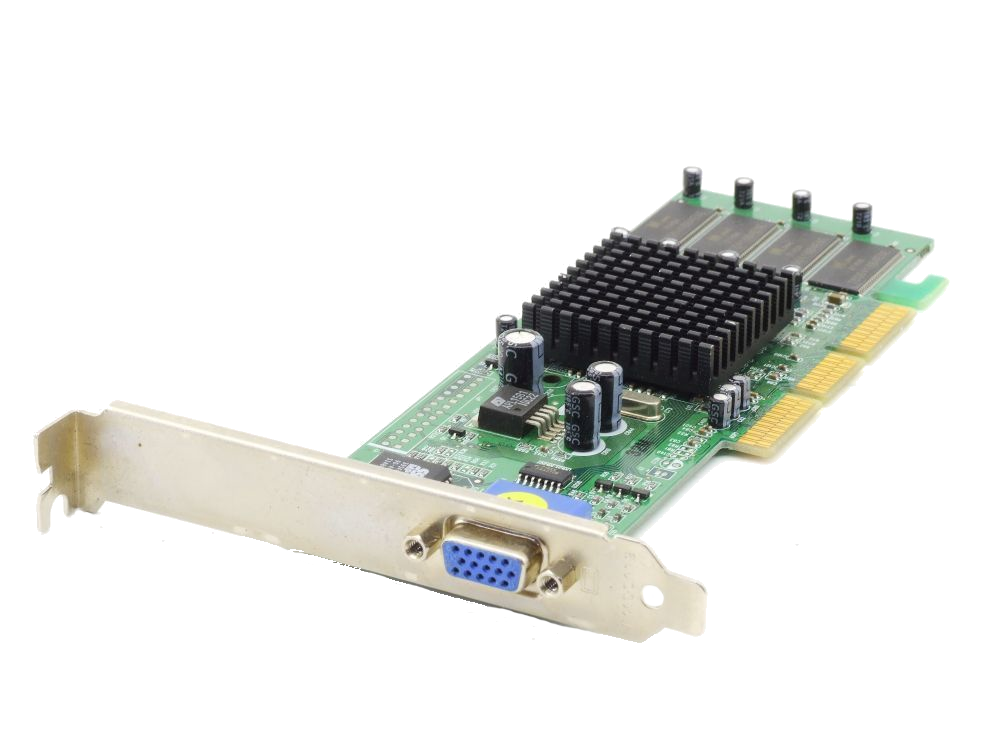

RIVA 128ZX

Launched: 1998

Chipset: NV3

Bus: AGP 1x/2x

Memory: 8 MB

Ports: 15-pin DSUB

DirectX: 5.0

OpenGL: 1.0

Price: ?

The RIVA 128ZX was an upgraded version of the RIVA 128, receiving a 250 MHz RAMDAC and adding support for up to 8 MB SGRAM. Higher resolutions are also supported.

The RIVA 128ZX was launched in February 1998. Built on a 350 nm process and based on the NV3 graphics processor in its RIVA 128ZX variant, the card supports DirectX 5.0. The NV3 is a relatively small chip with a die area of only 90 mm² and 4 million transistors. It features 1 pixel shader, 0 vertex shaders, 1 texture mapping unit and 1 ROP. Because it lacks unified shaders, it cannot run recent games that require unified shader (DirectX 10 or later) support. nVidia placed 8 MB of SDR memory on the card, connected through a 128-bit memory interface. Both the GPU and the memory run at 100 MHz.

Being a single-slot card, the RIVA 128ZX does not require an additional power connector; its exact power draw is not known. The only display output is 1x VGA. The card connects to the rest of the system through an AGP 2x interface.

Rough theoretical performance of this card is 100 MPixel/s pixel rate, and 100 MTexel/s texture rate. Memory bandwidth is approximately 1.6 GB/s.

This card was OEM'd to Dell, who used the STB Systems rebranded card. It was also sold as:

Beware of text fuzziness in DOS on all early nVidia cards up to and including the RIVA 128, caused by poor-quality RAMDACs. Despite this, it's highly recommended for DOS (no scrolling bugs, Mode X works fine and the BIOS is VBE 3.0).

Also be aware that you cannot disable VSYNC in the nVidia drivers. This can give the impression that the card is slower when you run performance timings against some of the competition, such as the 3dfx Voodoo. If a game supports disabling VSYNC, choose this option.
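To see why forced VSYNC can skew benchmark timings, here is a minimal sketch. It assumes simple double buffering, where each frame waits for the next vertical refresh; the function name and the 60 Hz refresh rate are illustrative assumptions, not anything taken from the nVidia drivers.

```python
import math


def vsync_capped_fps(raw_fps: float, refresh_hz: float) -> float:
    """Frame rate seen with VSYNC on, assuming simple double buffering."""
    # Each frame is held until the next vertical refresh, so its duration
    # is rounded up to a whole number of refresh intervals.
    refreshes_per_frame = math.ceil(refresh_hz / raw_fps)
    return refresh_hz / refreshes_per_frame


# Hypothetical unsynchronised frame rates on a 60 Hz display.
for raw in (45, 75, 120):
    print(f"{raw} fps unsynced -> {vsync_capped_fps(raw, refresh_hz=60):.0f} fps with VSYNC")
```

Under this model a card that could render faster than the refresh rate is capped at it, and one that falls just short drops to the next whole divisor, so a timing run against a card with VSYNC off is not a like-for-like comparison.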

"Pros

Integrated solution - Solid 2D and 3D performance have made the Riva series one of the most often selected parts by hardware manufacturers when building computers.

Decent price - Riva cards now go for around $150-$200 depending on memory configuration and other options.

Cons

Horrible Image Quality - The Riva's performance is gained at the expense of poor color saturation, blurred edges, seaming and dithering.

Weak Performance - Once the leader in Direct3D performance, nVidia's lack of new technology has forced it to play catch-up with other new technologies.

On The Horizon

Developers are talking about nVidia's next chip, the Riva TNT, which will fix image quality issues. With performance that's supposed to exceed that of Voodoo 2 in SLI mode while still providing exceptional 2D performance, nVidia thinks it has the holy grail of 3D cards. This winter, we'll find out when the first TNT boards begin to hit shelves at prices just over $200." PC Accelerator, Issue 1 September 1998

For more details, go to my dedicated page for the RIVA 128 series.


RIVA TNT

Launched: March 1998

Chipset: NV4

Bus: AGP 2x

Core Clock Speed: 90 MHz

Memory: 16 MB

Memory Speed: 110 MHz

Ports: 15-pin DSUB

Price: $200 (Diamond Viper V550)

RIVA TNT was nVidia's fourth graphics chip design (hence the NV4 chipset name) - it was Direct3D 6-compliant with much better image quality and performance compared to its predecessors.

"TNT" stands for TwiN Texel, pointing out that the TNT has two rendering pipelines that can work in parallel, equivalent to the two texelfx2 units in the 3Dfx Voodoo 2 chipset. Each pipeline can produce 1 pixel per clock cycle. Until then, only Voodoo (on the Quantum 3D boards only) and Voodoo 2 could do this parallel rendering; TNT was the next chip to provide this important feature. ATI's Rage 128 and 3Dlabs' Permedia 3 would be hot on its heels.

nVidia put 16 MB of SDR memory on the card, connected through a 128-bit memory interface. The GPU operated at 90 MHz with memory running at 110 MHz. Rough theoretical performance of this card is 180 MPixel/s pixel rate and 180 MTexel/s texture rate. Memory bandwidth is approximately 1.76 GB/s.

The RIVA TNT has an excellent 2D engine that produces great picture quality.

TNT was supposed to be the "Voodoo 2" killer, and whilst that wasn't quite the reality they'd hoped for, the TNT did have a number of advantages, including the 2x AGP interface and the ability to display games at 1600 x 1200 resolution (Voodoo 2 was limited to 800 x 600 in single-card form, and 1024 x 768 in SLI mode). TNT also got a 24-bit Z-buffer and 32-bit colour rendering (16.7 million colours), which surpasses even the 3dfx Voodoo 3, which was still limited to 16-bit colour (65,536 colours). Expect very good 3D performance (close to Voodoo 2), excellent image quality, and excellent 2D performance and quality.
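For reference, the colour counts at each depth and the size of a frame buffer at 1600 x 1200 can be worked out as below. This is a minimal sketch with helper names of my own; it assumes the common 32-bit layout of 8 bits each for red, green and blue plus 8 bits of alpha or padding.

```python
def colours(bits_per_pixel: int, alpha_bits: int = 0) -> int:
    """Distinct colours when alpha_bits carry no colour information."""
    return 2 ** (bits_per_pixel - alpha_bits)


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory needed for a single frame buffer at the given mode."""
    return width * height * bits_per_pixel // 8


print(f"16-bit colour: {colours(16):,} colours")
print(f"32-bit colour: {colours(32, alpha_bits=8):,} colours")

for depth in (16, 32):
    size_mb = framebuffer_bytes(1600, 1200, depth) / 1_000_000
    print(f"1600 x 1200 at {depth}-bit: {size_mb:.2f} MB per buffer")
```

A 3D game needs at least a front buffer, a back buffer and a Z-buffer, so the higher colour depth eats into a 16 MB card's memory quickly at the top resolutions.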

The RIVA TNT competed against the S3 Savage3D, Intel i740, 3dfx Voodoo Banshee, Mpact-2, Number Nine Ticket To Ride IV, and Matrox MGA-G200.

"The nVidia RIVA TNT chip powered some of the fastest accelerators in this roundup and is the current 2-D and 3-D speed leader." PC Magazine, December 1998

Key features:

- 0.35 micron technology (8 million transistors)

- 180 Mtexel/s texel fillrate

- 6M triangles/s

- 250 MHz RAMDAC

- 90 MHz core clock

- Maximum resolution of 1600 x 1200

- 24-bit Z-buffer

- 32-bit colour rendering

The RIVA TNT was also sold as:


RIVA TNT2

Launched: 1999

Chipset: NV5

Bus: AGP 2x, AGP 4x

Core Clock Speed: 125 MHz

Memory: 16 MB, 32 MB (standard) or 64 MB - all have 128-bit memory bus width

Memory Speed: 150 MHz, 183 MHz (Ultra)

RAMDAC Speed: 300 MHz

Ports: 15-pin DSUB

Price: £102 ex.VAT (AOpen PA3020 16 MB), £147 ex.VAT (AOpen PA3020 32 MB), £168 ex.VAT (Asus AGP-V3800 Deluxe), £109 ex.VAT (Gigabyte GA-660, Dec '99)

The TNT2 used nVidia's fifth graphics chip design, NV5. It was largely similar to its predecessor, the TNT, but added support for AGP 4x and up to 32 MB of video RAM. Additionally, the TNT2 was manufactured on a smaller, more advanced process, resulting in much higher clock speeds and less heat. By the time the TNT2 arrived, 3Dfx was really struggling to keep pace.

nVidia also launched the TNT2 Ultra, which is just an overclocked version of the TNT2. It was one of the fastest cards of that era, and will work with Windows 3.1x using the native TNT drivers. It also had good DOS and Windows 9x support, apart from DOS games that require UniVBE drivers. It competed directly against the award-winning Matrox Millennium G400 Max.

"The [Asus AGP-]V3800 Deluxe is an incredibly fast card - it grabbed top place in six of the 11 tests and managed second position in three others. With performance like this, you'll be able to get the most out of any 3D game." Personal Computer World, December 1999

The TNT2 offers full support for 32-bit colour in 3D games as well as a 24-bit Z-buffer.

This chipset was a very popular one and OEM'd by many manufacturers:

"The [Diamond] Viper V770 Ultra, utilising nVidia's Riva TNT2 Ultra chipset, is definitely the over achieving member of the Diamond family - although its future is very much in doubt due to S3's recent acquisition of Diamond. The card packs 32 MB of SDRAM, a 300 MHz RAMDAC and an AGP 4x interface. Its 2D performance saw the Viper sitting in the middle of the group, with a similar situation exhibited as we moved onto Direct3D testing at 16bit colour. Increasing the colour depth to 32bit drew better scores from the card, placing it further up the field. The best results were seen during OpenGL performance, with the Viper consistently sitting within the top five cards. The Viper aims to provide raw power and doesn't supply extra features, such as additional outputs or TV tuners." Personal Computer World, December 1999


RIVA TNT2 M64 / TNT2 Model 64 / VANTA

Launched: 1999

Chipset: NV5 B6

Bus: AGP 4x

Core Clock Speed: 125 MHz (M64), 100 MHz (VANTA)

Memory: 16 MB, 32 MB - all have 64-bit memory bus width

Memory Speed: 143 MHz (M64), 125 MHz (VANTA)

Ports: 15-pin DSUB

Price: £75 ex.VAT (Dec 1999)

M64 and VANTA were budget versions of the TNT2. The nVidia original of this card was also called the GM1000-32. The "M64" means this card has a 64-bit memory bus width (as opposed to the TNT2's 128-bit), which halves memory bandwidth at the same memory clock. VANTA cards (also with a 64-bit memory bus) typically run both the core and the memory at 100 MHz, so they are even slower than most M64 cards.

The RIVA TNT2 M64 was launched in October 1999. Built on a 250 nm process and based on the NV5 B6 graphics processor in its M64 variant, the card supports DirectX 6.0. The NV5 B6 is a relatively small chip with a die area of only 90 mm² and 15 million transistors. It features 2 pixel shaders, 0 vertex shaders, 2 texture mapping units and 2 ROPs. Because it lacks unified shaders, it cannot run recent games that require unified shader (DirectX 10 or later) support. nVidia placed 16 MB of SDR memory on the card, connected through a 64-bit memory interface. The GPU runs at 125 MHz and the memory at 143 MHz.

Being a single-slot card, the RIVA TNT2 M64 does not require an additional power connector; its exact power draw is not known. The only display output is 1x VGA. The card connects to the rest of the system through an AGP 4x interface.

Rough theoretical performance of this card is 250 MPixel/s pixel rate and 250 MTexel/s texture rate. Memory bandwidth is approximately 1.144 GB/s. In real games, the M64 typically outperforms the original TNT at lower resolutions (320x200, 640x480 or 800x600) but is only on par with the TNT at anything higher, because of its lower memory bandwidth. You can overclock an M64 card, however; concentrate on the memory clock for the best performance gains.
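One rough way to picture the M64's bandwidth handicap is to divide each card's memory bandwidth by its peak pixel rate, giving the number of bytes of memory traffic available per pixel when the chip is drawing flat out. This is only a back-of-the-envelope sketch using the figures quoted on this page; the helper name is my own and real-world behaviour depends on much more than this ratio.

```python
def bytes_per_pixel_budget(bandwidth_gb_s: float, pixel_rate_mpixels: float) -> float:
    """Memory traffic available per pixel at peak fill rate (decimal units)."""
    return bandwidth_gb_s * 1000 / pixel_rate_mpixels


# (memory bandwidth in GB/s, pixel rate in MPixel/s) as quoted on this page.
cards = {
    "RIVA TNT": (1.76, 180),
    "TNT2 M64": (1.144, 250),
}

for name, (bandwidth, pixel_rate) in cards.items():
    budget = bytes_per_pixel_budget(bandwidth, pixel_rate)
    print(f"{name}: {budget:.2f} bytes per pixel at peak fill rate")
```

The M64 has the faster core but less than half the TNT's per-pixel memory budget, which fits with its advantage fading once the memory rather than the core becomes the limit.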

Cards with 32 MB of memory helped the TNT2 Model 64 compete at higher resolutions, and in late 1999 this would have been a mid-market choice: not the cheapest, slowest card around, but also not the fastest, and at under £90 it was within reach of many. If you were in the market for an nVidia card but had more cash to throw around, you'd instead look at the TNT2 Ultra.

Rebranded versions of the M64 were sold as:


GeForce 256

Launched: 1999

Bus: AGP 4x

Memory: 32 MB of SDR or DDR RAM.

The initial release of nVidia's game-changing card arrived in October 1999, and it came with SDR RAM manufactured by Samsung. In December of that same year, nVidia upgraded the GeForce 256 to use DDR RAM provided by Hyundai or Infineon.

nVidia GeForce 256-based cards include:

- ASUS AGP-V6600 Deluxe (SDR memory)

- ASUS AGP-V6800 Deluxe (DDR memory)

- ELSA GLoriaII Quadro SDR

- Guillemot (Hercules) 3D Prophet SDR

- Creative Labs Annihilator CT-6960 (SDR memory)

- Creative Labs Annihilator CT-6970 (DDR memory)

"The GeForce 256 is the first graphics chip to include transform and lighting (T&L) engines in its core design. T&L engines take a huge amount of load away from the CPU since the geometry calculation is performed locally, leaving it free to concentrate on other things. The GeForce 256 also incorporates S3's texture compression, allowing huge textures to be squeezed to a managable size, improving the image quality without a loss of speed. Strangely, nVidia has chosen not to include hardware environment bump mapping on the chip, leaving the Matrox G400 as the only card supporting this DirectX feature.

It's impressive that nVidia is so far ahead of the competition with the GeForce 256, but as with any piece of hardware, it's only as good as the software that runs on it. Unfortunately, there's no software that supports T&L yet, partly due to the fact that DirectX 7 is required. Even the 3DMark benchmarking suite doesn't support T&L yet. That said, T&L is definitely the future of 3D graphics cards, so don't be surprised if every new card released supports this feature." Personal Computer World, December 1999
